
The sample size is the number of scores in each sample; it is the number of scores that go into the computation of each mean. Because statisticians know a lot about the normal distribution, analyses based on it are much simpler. The theorem also helps explain why the normal distribution appears so frequently in nature: many natural quantities are the result of several independent processes. Either way, as long as the population has a finite variance, it does not matter what the distribution of the original population is, or whether you even know it.
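A small simulation makes this concrete. The sketch below uses only Python's standard library. The exponential population (mean 1, standard deviation 1), the sample size of 50 and the 5,000 repeated samples are illustrative assumptions, not values from this article. The sketch checks two things: that the sample means centre on the population mean, and that about 95% of them fall within ±1.96 standard errors, which is what a normal approximation predicts.

```python
import random
import statistics

def sample_means(draw, n, num_samples, rng):
    """Return num_samples means, each computed from n independent draws."""
    return [statistics.fmean(draw(rng) for _ in range(n)) for _ in range(num_samples)]

# Assumed skewed population: exponential with mean 1 and standard deviation 1.
def draw_exponential(rng):
    return rng.expovariate(1.0)

rng = random.Random(42)
n = 50
means = sample_means(draw_exponential, n, num_samples=5000, rng=rng)

mu, sigma = 1.0, 1.0
se = sigma / n ** 0.5  # standard error of the mean
# Under a normal approximation, about 95% of sample means lie within mu ± 1.96 * SE.
coverage = sum(abs(m - mu) <= 1.96 * se for m in means) / len(means)
print(f"mean of sample means: {statistics.fmean(means):.3f}")
print(f"share within ±1.96 SE: {coverage:.3f}")
```

You should get similar results if you swap `draw_exponential` for any other finite-variance population, and that insensitivity to the population's shape is the point of the theorem.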

This definition of the Central Limit Theorem does not quite explain the meaning and purpose of the theorem to a layperson. It simply says that with large sample sizes, the sample means are approximately normally distributed. To understand this better, we first need to define all the terms. In statistics, the standard error is the standard deviation of the sampling distribution.

Application of Central Limit Theorem in Supply Chain: An Illustration

We don't know μ, but we can find a lower limit and an upper limit such that the area under the curve between these two limits is 90%. This range is a 90% confidence interval for μ. As n increases, the sampling distribution of the mean approaches the normal distribution, and for many populations it does so very quickly. Remember that n is the number of data points in each sample, not the number of samples.
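The following is a minimal sketch of finding those two limits. It uses the normal approximation: the sample mean plus or minus z standard errors, where z ≈ 1.645 for 90%. The data values are hypothetical. Because σ is unknown, the sketch estimates the standard error with the sample standard deviation. For small samples, a t-based interval would be slightly wider and is usually preferred.

```python
import math
from statistics import NormalDist, fmean, stdev

def confidence_interval(data, level=0.90):
    """Normal-approximation (z) interval for the population mean mu."""
    n = len(data)
    x_bar = fmean(data)
    se = stdev(data) / math.sqrt(n)            # estimated standard error s / sqrt(n)
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.645 for a 90% interval
    return x_bar - z * se, x_bar + z * se

# Hypothetical measurements, for illustration only.
data = [7.2, 6.8, 8.1, 5.9, 7.5, 6.4, 9.0, 7.7, 6.1, 8.3,
        7.0, 6.6, 7.9, 8.8, 5.7, 7.3, 6.9, 7.6, 8.0, 6.2,
        7.4, 7.1, 6.5, 8.4, 7.8, 6.7, 7.2, 8.6, 6.3, 7.0]
low, high = confidence_interval(data)
print(f"90% CI for mu: ({low:.2f}, {high:.2f})")
```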

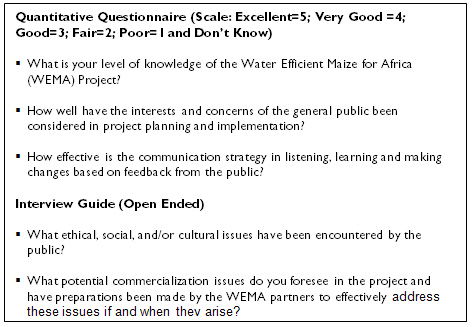

According to experts, the central limit theorem has several applications, and we have summarized them in the table below. If sampling is done without replacement, the sample size should not exceed 10% of the total population, so that the observations remain approximately independent.
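The 10% guideline is commonly linked to the finite population correction factor, $\sqrt{(N-n)/(N-1)}$. This factor multiplies the standard error when you sample without replacement from a population of size N. The helper below is hypothetical and not part of any library. It shows that when n is 10% of N, the factor is still about 0.95, so ignoring it changes the standard error by only about 5%.

```python
import math

def finite_population_correction(population_size, sample_size):
    """Hypothetical helper: sqrt((N - n) / (N - 1)), the factor that shrinks the
    standard error when sampling without replacement from N items."""
    N, n = population_size, sample_size
    if not 0 < n <= N or N < 2:
        raise ValueError("need 2 <= N and 1 <= n <= N")
    return math.sqrt((N - n) / (N - 1))

N = 10_000
for fraction in (0.01, 0.05, 0.10, 0.30):
    n = round(fraction * N)
    factor = finite_population_correction(N, n)
    print(f"n = {fraction:.0%} of N -> correction factor {factor:.3f}")
```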

This article does not walk you through the proof of the CLT; instead, it illustrates the definition and applications of the CLT. For the sake of practicality, the data scientist takes a sample of 10% of users over a period of one week to measure the time spent on the home page. However, since this is a random sample, the average will vary with every sample that is selected. Sourabh has worked as a full-time data scientist for an ISP organisation and is experienced in analysing patterns and their implementation in product development.

- It states that «As the sample size becomes larger, the distribution of sample means approaches a normal distribution curve.»

- The standard deviation is a measure of spread that describes how far values tend to be from the mean.

- When the population's standard deviation is not given, we replace it with the sample's standard deviation. The standard error is then estimated as $s/\sqrt{n}$: the sample standard deviation divided by the square root of the sample size.

- Thankfully, the central limit theorem makes these calculations easy.

- The standard error is the population standard deviation divided by the square root of the sample size, that is, $\sigma/\sqrt{n}$.
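The two standard-error formulas from the list can be written as one small function. In this sketch, the observations and the "known" σ = 2 are hypothetical. When σ is supplied, the function uses $\sigma/\sqrt{n}$; otherwise, it falls back to the sample standard deviation, $s/\sqrt{n}$.

```python
import math
import statistics

def standard_error(sample, population_sd=None):
    """Standard error of the sample mean.

    Uses sigma / sqrt(n) when the population standard deviation is known,
    otherwise the sample standard deviation s / sqrt(n)."""
    n = len(sample)
    sd = population_sd if population_sd is not None else statistics.stdev(sample)
    return sd / math.sqrt(n)

sample = [12, 15, 9, 14, 11, 13, 10, 16]          # hypothetical observations
print(standard_error(sample, population_sd=2.0))  # sigma known: 2 / sqrt(8)
print(standard_error(sample))                     # sigma estimated by s = sqrt(6)
```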

The central limit theorem can also be stated as follows: the distribution of the sample mean approximates the normal distribution. The assumption here is that the samples are drawn independently from the same population, while the shape of the population distribution can be almost anything. Every statistician's or data scientist's dream is to see that their population is normally distributed! The CLT applies to almost every probability distribution we know that has a finite variance. The notable exception is the Cauchy distribution, which has no finite mean or variance. You are going to see in a moment how this simple but elegant theorem forms the most critical pillar for making inferences in applied statistics and machine learning. The standard deviation of the sampling distribution, also known as the standard error, is equal to the population standard deviation divided by the square root of the sample size.
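The Cauchy exception can be seen directly. The mean of n independent standard Cauchy draws is itself standard Cauchy, so averaging more values does not narrow its spread. The sketch below compares this with a finite-variance Uniform(0, 1) population. The sample sizes, the number of repetitions and the choice of the interquartile range (IQR) as the spread measure are assumptions. The IQR is used because the Cauchy variance does not exist.

```python
import math
import random
import statistics

def draw_uniform(rng):
    return rng.random()  # Uniform(0, 1): finite variance 1/12

def draw_cauchy(rng):
    # Standard Cauchy via the inverse CDF: tan(pi * (U - 1/2)).
    return math.tan(math.pi * (rng.random() - 0.5))

def iqr_of_sample_means(draw, n, num_samples, rng):
    """Interquartile range of num_samples sample means, each from n draws."""
    means = [statistics.fmean(draw(rng) for _ in range(n)) for _ in range(num_samples)]
    q1, _, q3 = statistics.quantiles(means, n=4)
    return q3 - q1

rng = random.Random(0)
results = {}
for n in (10, 100, 1000):
    results[n] = (iqr_of_sample_means(draw_uniform, n, 1000, rng),
                  iqr_of_sample_means(draw_cauchy, n, 1000, rng))
    print(f"n={n:5d}  uniform IQR={results[n][0]:.4f}  Cauchy IQR={results[n][1]:.4f}")
```

The uniform column should shrink roughly like $1/\sqrt{n}$. The Cauchy column should hover around 2, which is the IQR of a single standard Cauchy draw.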

Let's use a real-world data analysis problem to illustrate the utility of the Central Limit Theorem. Say a data scientist at a tech startup has been asked to figure out how engaging their homepage is. As the first step, she has to decide which metric to use as a measure of engagement. She chooses the average time spent on the homepage, which leads to the hypothesis that the homepage is engaging if the average time spent on it is over 7 minutes. To test this hypothesis, she needs to understand the likelihood of seeing the same data even if the hypothesis is wrong. In other words, she needs to quantify the likelihood that the data is due to chance rather than to a real effect.
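The following is a minimal sketch of how the CLT supports this test. With a large sample, the mean session time is approximately normal even if individual session times are skewed. A one-sided z-test can therefore compare the sample mean against 7 minutes. The session times here are simulated and hypothetical; the sample size of 400, the seed and the function name are all assumptions, not the data scientist's actual data or method.

```python
import math
import random
from statistics import NormalDist, fmean, stdev

def one_sided_z_test(times, threshold):
    """Test H0: mu <= threshold against H1: mu > threshold.

    Relies on the CLT: with a large sample, the sample mean is approximately
    normal with standard error s / sqrt(n), whatever the shape of the data.
    """
    n = len(times)
    se = stdev(times) / math.sqrt(n)
    z = (fmean(times) - threshold) / se
    # Probability of a z at least this large if mu were exactly the threshold.
    p_value = 1 - NormalDist().cdf(z)
    return z, p_value

# Hypothetical, right-skewed session times in minutes (not real data).
rng = random.Random(7)
times = [rng.expovariate(1 / 7.5) for _ in range(400)]
z, p = one_sided_z_test(times, threshold=7.0)
print(f"z = {z:.2f}, one-sided p-value = {p:.4f}")
```

A small p-value means that the observed average would be unlikely if the true mean were only 7 minutes.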

The wonderful and counter-intuitive thing about the central limit theorem is that no matter what the shape of the original distribution (provided it has finite variance), the sampling distribution of the mean approaches a normal distribution. Furthermore, for many distributions, the normal distribution is approached very quickly as N increases. The first step in improving the quality of a product is often to identify the most important factors that contribute to unwanted variation. If these efforts succeed, then any residual variation will typically be caused by numerous factors acting roughly independently. In other words, the remaining small amounts of variation can be described by the central limit theorem, and the remaining variation will typically approximate a normal distribution. For this reason, the normal distribution is the basis for many key procedures in statistical quality control.
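As a rough illustration of many small, independent factors adding up, this sketch models each factor as a Uniform(0, 1) contribution. That is an assumption; real process factors need not be uniform. Summing 12 such factors and subtracting 6 gives a total with mean 0 and variance 12 × 1/12 = 1. Its distribution is already very close to the standard normal, which illustrates how quickly the normal shape can be reached.

```python
import random
import statistics

def sum_of_factors(num_factors, rng):
    """Total of many small, independent Uniform(0, 1) contributions, centred at 0."""
    return sum(rng.random() for _ in range(num_factors)) - num_factors / 2

rng = random.Random(1)
totals = [sum_of_factors(12, rng) for _ in range(20_000)]
print(f"mean ≈ {statistics.fmean(totals):.3f}, sd ≈ {statistics.stdev(totals):.3f}")
# For a standard normal, about 68.3% of values lie within ±1.
share = sum(abs(t) <= 1 for t in totals) / len(totals)
print(f"share within ±1 ≈ {share:.3f}")
```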

What Is the Central Limit Theorem? | Central Limit Theorem in R

While we can't obtain a height measurement from everyone in the population, we can still sample some people. The question then becomes: what can we say about the average height of the entire population, given a single sample? The same idea carries over to machine learning. We can run multiple independent evaluations of a model's accuracy to produce a population of candidate skill estimates. The mean of these skill estimates will be an estimate of the model's true underlying skill on the problem.
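A minimal sketch of summarizing such skill estimates follows. The ten accuracy values are hypothetical. The ±1.96 standard-error band relies on the CLT and is only a rough guide with so few runs. It also assumes that the runs are independent, which does not strictly hold for overlapping k-fold splits.

```python
import math
import statistics

def summarize_skill(scores):
    """Mean skill estimate and its standard error from independent evaluations."""
    mean = statistics.fmean(scores)
    se = statistics.stdev(scores) / math.sqrt(len(scores))
    return mean, se

# Hypothetical accuracies from 10 independent evaluation runs of one model.
accuracies = [0.81, 0.79, 0.84, 0.80, 0.82, 0.78, 0.83, 0.81, 0.80, 0.82]
mean, se = summarize_skill(accuracies)
print(f"estimated skill = {mean:.3f} ± {1.96 * se:.3f} (approx. 95% interval)")
```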

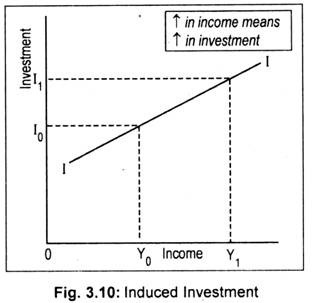

In addition, the skew and kurtosis of each distribution are shown on the left. The figure below illustrates a normally distributed characteristic, X, in a population in which the population mean is 75 with a standard deviation of 8. The mean of the population is approximately equal to the mean of the sampling distribution. We can see this in the example above, where the population mean (98.87) is approximately equal to the mean of the sampling distribution (98.78). Formally, suppose that $X_1, X_2, \ldots, X_n$ are i.i.d. random variables with expected value $E[X_i] = \mu$ and finite variance $\mathrm{Var}(X_i) = \sigma^2$. Then, as $n$ grows, the standardized sample mean $(\bar{X}_n - \mu)/(\sigma/\sqrt{n})$ converges in distribution to the standard normal $N(0, 1)$. Do you know about all the different applications of the central limit theorem?
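Here is how to work with the figure's numbers. Because X is normal with μ = 75 and σ = 8, the sample mean is exactly normal with mean 75 and standard error $8/\sqrt{n}$ for any n. The sample size n = 16 and the cutoff of 79 are hypothetical choices for illustration. The sketch uses Python's `statistics.NormalDist`.

```python
from statistics import NormalDist

mu, sigma = 75, 8  # population mean and standard deviation from the figure
n = 16             # hypothetical sample size, chosen so that sigma / sqrt(n) = 2

sampling_dist = NormalDist(mu, sigma / n ** 0.5)  # distribution of the sample mean
print(sampling_dist.mean, sampling_dist.stdev)     # 75.0 2.0
print(f"P(sample mean > 79) = {1 - sampling_dist.cdf(79):.4f}")  # 0.0228
```

A sample mean of 79 lies two standard errors above 75, so a sample of 16 would produce such a mean only about 2.3% of the time.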

It should be noted that the larger the sample size, the better the normal approximation. This is an amazing insight: it allows us to perform hypothesis testing and derive useful statistical inferences even when the population data is not normally distributed, provided we have a sufficiently large sample size. A larger sample also makes the estimate more precise, because the sample mean is more likely to lie very close to the population mean.

In this article, we will look at the definition of the central limit theorem, along with all the major concepts that one needs to know about this topic. The central limit theorem describes the distribution of the means of samples drawn from a population: if the sample size is large enough and the population has finite variance, that distribution is approximately normal. As you can see, the Central Limit Theorem is a very powerful theorem that helps us derive a lot of insights.

Central Limit Theorem

Normal distributions are continuous probability distributions that are symmetrically distributed around their mean. Most observations cluster around the central peak, and probabilities for values further from the mean taper off equally in both directions. Extreme values in either tail of the distribution are similarly unlikely. The normal distribution is always symmetrical, but not all symmetrical distributions are normal. So, in this module, we have covered how the sampling distribution forms the basis for the central limit theorem.

For example, an interval estimate might tell you that a store sells between 200 and 300 cans an hour. Such a range is called a confidence interval, and we have already seen its formula. Confidence intervals will be extremely useful in the coming modules. The sampling distribution of the mean has three important properties:
- Its mean, $\mu_{\bar{x}}$, is equal to the population mean, $\mu$.
- Its standard deviation (the standard error) is $\sigma/\sqrt{n}$.
- For a large enough sample, its shape is approximately normal.

The size of the sample, n, that is required to be “large enough” depends on the original population from which the samples are drawn.

Sample Mean Vs Population Mean

Mathematically, the standard error is estimated as the standard deviation of the sample divided by the square root of the sample size, $s/\sqrt{n}$. The central limit theorem is one of the most important results in probability theory. It states that, under certain conditions, the sum of a large number of random variables is approximately normal. Here, we state a version of the CLT that applies to i.i.d. random variables. The Central Limit Theorem has several important implications for statistical analysis. For example, it can be used to justify the use of the normal distribution as a model for the sampling distribution of the sample mean.

Formally, it states that if we sample from a population using a sufficiently large sample size, the means of the samples will be approximately normally distributed. What's especially important is that this will be true regardless of the distribution of the original population.

The central limit theorem tells us that if there are enough independent influencing factors, then their combined total will be approximately normally distributed. It also justifies approximating large-sample statistics with the normal distribution in controlled experiments. And it doesn't apply only to the sample mean; the CLT also holds for other sample statistics, such as the sample proportion. Suppose that we are interested in estimating the average height among all people. Collecting data for every person in the world is impractical, bordering on impossible.
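The sample-proportion case can be checked with a short simulation. By the CLT, the sample proportion $\hat{p}$ from n independent yes/no trials is approximately normal with mean p and standard error $\sqrt{p(1-p)/n}$. The true proportion p = 0.3, the sample size of 200 and the 5,000 repetitions are hypothetical values chosen for illustration.

```python
import math
import random
import statistics

p, n = 0.3, 200  # hypothetical true proportion and sample size
rng = random.Random(3)

# Each sample proportion is the share of "successes" in n Bernoulli(p) trials.
proportions = [sum(rng.random() < p for _ in range(n)) / n for _ in range(5000)]

predicted_se = math.sqrt(p * (1 - p) / n)  # CLT: p-hat is roughly Normal(p, p(1-p)/n)
print(f"mean of p-hat: {statistics.fmean(proportions):.4f} (p = {p})")
print(f"sd of p-hat:   {statistics.stdev(proportions):.4f} (predicted {predicted_se:.4f})")
```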

The central limit theorem tells us exactly what the shape of the distribution of means will be when we draw repeated samples from a given population. Specifically, as the sample sizes get bigger, the distribution of means calculated from repeated sampling will approach normality. What makes the central limit theorem so remarkable is that this result holds no matter what shape the original population distribution may have had. According to the central limit theorem, the means of random samples of size $n$ from a population with mean $\mu$ and variance $\sigma^2$ are approximately normally distributed with mean $\mu$ and variance $\sigma^2/n$. If we take the square root of that variance, we get the standard deviation of the sampling distribution, $\sigma/\sqrt{n}$, which we call the standard error.

Sampling simply means picking random values from the given curve according to their probabilities. For example, if we ask the computer to pick one random value, it will most likely pick a value from the tallest part of the curve. However, it may occasionally pick values from the lower ends (tails) of the curve. Usually, a sample size is specified when the computer generates random samples from the histogram or curve; the sample size is the number of entries that each sample should contain.
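In Python, this kind of probability-weighted picking can be sketched with `random.Random.choices`. In this sketch, the histogram's bin values and probabilities are hypothetical, and `k` plays the role of the sample size.

```python
import random
from collections import Counter

# Hypothetical histogram: bin values and their probabilities (a peaked curve).
values = [1, 2, 3, 4, 5]
probabilities = [0.05, 0.20, 0.50, 0.20, 0.05]

rng = random.Random(11)
sample_size = 10  # number of entries in one sample
sample = rng.choices(values, weights=probabilities, k=sample_size)
print(sample)

# Over many draws, the middle (tallest) bin is picked most often,
# while the tails are picked only occasionally.
counts = Counter(rng.choices(values, weights=probabilities, k=100_000))
print(sorted(counts.items()))
```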

The central limit theorem states that the sampling distribution of the mean of independent, identically distributed random variables with finite variance will be normal or nearly normal if the sample size is large enough. As a common rule, sample sizes equal to or greater than 30 are deemed sufficient for the CLT to hold, meaning that the sample means are approximately normally distributed. Heavily skewed populations may need larger samples. Therefore, the more samples one takes, the more closely the graphed results take the shape of a normal distribution. Furthermore, the sample means will follow an approximately normal distribution, with variance approximately equal to the population variance divided by the sample size. If this process is repeated many times, the central limit theorem says that the probability distribution of the average will closely approximate a normal distribution.
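The variance claim can be checked empirically with the rule-of-thumb size n = 30. This sketch builds a hypothetical right-skewed population and draws 10,000 samples from it with replacement, so no finite-population correction is needed. It then compares the variance of the sample means with the population variance divided by n. The population, its size and the seed are illustrative assumptions.

```python
import random
import statistics

def sampling_distribution(population, n, num_samples, rng):
    """Means of num_samples random samples of size n, drawn with replacement."""
    return [statistics.fmean(rng.choices(population, k=n)) for _ in range(num_samples)]

rng = random.Random(5)
# Hypothetical right-skewed population (e.g. waiting times with mean 4).
population = [rng.expovariate(1 / 4) for _ in range(20_000)]
pop_var = statistics.pvariance(population)

n = 30  # the common rule-of-thumb sample size
means = sampling_distribution(population, n, num_samples=10_000, rng=rng)
print(f"mean of sample means:     {statistics.fmean(means):.3f}"
      f"  (population mean {statistics.fmean(population):.3f})")
print(f"variance of sample means: {statistics.variance(means):.4f}")
print(f"population variance / n:  {pop_var / n:.4f}")
```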